This post is mainly addressed to all of my online friends, acquaintances, contacts, etc etc, whether we interact via Twitter, Facebook, Skype, e-mail, or some combination of these. (Everyone says I have to be on WhatsApp and Instagram too, but I already waste way too much time online as it is.) Of course, people I've never heard of are welcome to read and comment on this too.

These are strange times. Since about 24 June 2016 I have had this constant strange feeling of unease. It's faint, but real. And since 9 November 2016, it has become a bit less faint.

I don't think I've ever had any problems with mental health. That is, if I complete a measure of depression, I don't think I've ever been at a point in my life where I scored above 0 on more than a couple of the items, and even then I only would have scored 1 on those. About once every three years I go through a little phase where I feel strangely lethargic for a couple of days (after controlling for hangovers), but that's about it.

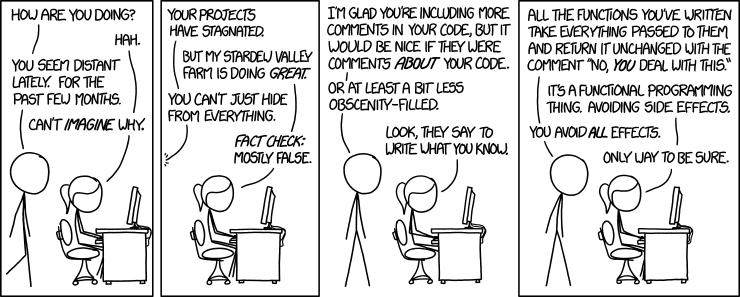

I just looked at the Beck Depression Inventory and today I scored 6 out of 63. Probably my highest ever, but I didn't score more than 1 on any item, and a score of 1 to 10 is classed as "These ups and downs are considered normal". So apparently I'm fine at that level. This sums up more like how I feel:

My "work", such as it is (I don't have a job that involves leaving the house and going to an office with a boss where I have to do stupid shit), involves quite a lot of being critical of other people's work. I try to do this in as civilised a way as possible. I prefer to write my critiques of scholarly work in the form of manuscripts that are at least intended for publication in journals (except when something really pisses me off and I dash off a blog post about it, which I usually regret shortly afterwards when it turns out I didn't do my due diligence). When you work this way, you need a valve to release the pressure, because it's very, very slow and tedious to work your way through a series of articles, with almost innumerable statistical errors in them, about how people consume pizza (shameless pimping of our new preprint there). For me, that valve is mostly Twitter, and sometimes Facebook. But that brings me back face to face with... well, the causes of "that feeling". 80% of the tweets in my feed, and every second Facebook post, seem to be about what the whole world (or at least, my blinkered, woolly-liberal(*) section of it) is talking about.

I'm starting to think that this feeling of unease may be affecting my interactions. People who used to be up for stupid, nerdy banter about stuff that doesn't matter seem to be a little bit more sensitive. Stuff doesn't get discussed that probably ought to. Or, perhaps worse, stuff that shouldn't be discussed does come up. I've witnessed people whose fundamental views on a particular question differ by about one hair's width from each other having fights --- well, not quite fights, but exchanges of snarkiness --- over utterly trivial details. People seem to be a little bit on edge. I find myself wondering if I ought to drop that bit of banter into a tweet when the only people who will read it are people I've been happily bantering with for a couple of years.

I have been wondering whether I'm alone in experiencing this "gnawing feeling" in the form of (what I presume is) low-level stress. Today, as I wondered whether to publish this draft (which I've been working on occasionally for a few days now, not that it shows from the quality of the writing), I saw that my occasional co-conspirator James Heathers --- for whom the words "irrepressibly upbeat" are normally a mere pastiche of an understatement --- seems to have been having something similar going on. So maybe it's not just me.

And I'm lucky. I'm white and male and all of the other things that place me above the midpoint of luck and privilege on every scale ever. Just after the US election result, I saw a tweet from a Black person that basically said, "Hey, liberal white folks. That feeling in your stomach right now? Welcome to our world, every day of our lives". So I'm conscious that this is probably just me having a whine about how I don't feel as good as I think I'm entitled to feel.

Currently I don't have many ideas for cheering myself up. Silly, over-the-top prog-rock wigouts work a bit, for a few moments. My slow acquisition of the documents I need to apply for Irish nationality provided a couple of moments of light relief last Friday, as one certificate arrived in the post and I got e-mail confirmation that another was on its way. But these are small consolations.

Anyway, back to the first paragraph (all the professional writers seem to have learned at writing school that you have to finish with a quirky point that ties back into your first quirky point). To my online friends, acquaintances, etc: If I am being "differently annoying" right now --- i.e., not in the normal "Nick, we get it, just shut up now" way :-) --- then I apologise, but things are, well, not normal.

PS: Normally I allow comments on my posts, but it doesn't feel right in this case. That seems to fit in with my theme here. Heh.

(*) I don't think I'm very political. I mean, yes, I don't like racism, and I think that multinationals probably ought to pay more tax, and the state in some countries should probably help poor people more, but I do find a lot of "progressive" ideas to be just sloganising. I think that there are real biological differences between the sexes, and I don't want it to be impossible to start a business because you might make a lot of money from it. I just wish the view was better from on top of this pile of fences.
